Red Team Diaries: Cyber

SE01 E02

(To protect the identities of those involved, this article is a dramatization of events taken from a mixture of engagements.)

Checking-in

I had just stolen two laptops from my client’s office. After days of research and surveillance, I had made it past their physical security with relative ease. My next task was to tell the client about the breach.

I am a red-teamer, hired to test clients’ readiness to prevent, detect, and respond to targeted cyber attacks. Stealing those laptops was phase one of my work; the physical prelude to the true test of cyber security.

My client wanted to understand the severity of the risk should a malicious actor acquire one of their laptops. They were not only worried about laptops being stolen, but also about laptops being lost in public places like trains and taxis (which happens roughly as often as true thefts).

Phase one had gone well. I updated the client’s “white team” (the internal stakeholders refereeing the engagement) over the agreed encrypted channel. This is a critical part of the red team service, which is all about collaboration, communication, and education. A red team engagement needs to be an authentic test, but it should never be unsafe or add risk to the business. I always contact white teams regularly and at a frequency they are happy with.

I was about to start phase two: penetrating the client’s computer network to steal their high-value trading algorithms and workflows.

The laptop whisperer

Double shot black coffee. Really black. So black, light cannot escape the cup. This is the best way to start.

Encryption

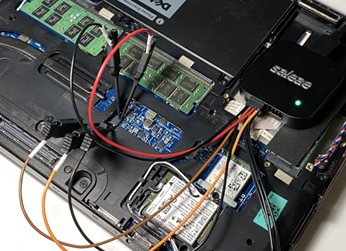

Back in my own office, I flipped the contractor's laptop upside down and opened the back to get to the Trusted Platform Module (TPM) chip.

Many organizations use full disk encryption to secure their devices. This is a good move, but users are often lazy: they prefer to skip the authentication step that makes this setup secure.

When an encrypted computer equipped with a TPM chip starts, the BIOS chip in the computer asks the TPM chip "got a decryption key for me?" and the TPM chip complies and sends the key.

Users should be required to put in a PIN or password before the key is sent from the TPM chip to the BIOS chip. However, companies will often disable that requirement because employees will complain if they have to punch in a PIN every time they start the computer. This lack of authentication is a golden ticket for attackers.

If you look in the right places on the laptop’s circuitry, you can eavesdrop on the communication of the TPM chip transmitting the decryption key across the motherboard. All you need is the right equipment.

Recommendation

Encryption on its own is not enough to secure a laptop. You might have a decent lock on your front door, but it doesn't really matter if the door unlocks automatically or if you keep the key under the doormat.

If a laptop is stolen, best practice is to assume that the security on it is going to be breached. Perform threat modeling to understand what the impacts of that would be for your organization.

Figure 1: Logic sniffer

I used a USB device, a ‘logic sniffer’, the size of a credit card. It records activity passing between the BIOS chip and the TPM chip on the motherboard. After the eavesdropping device located the key, I disconnected it and began the recovery process using software written by one of my colleagues.

Backdoor

My next priority was to install a backdoor on the main machine so that I would still be able to access it, even if someone restarted the computer, ran software updates, or changed the password. This is known as maintaining persistence. This backdoor would work the moment the laptop was powered on and connected to the client’s VPN infrastructure.

Recommendation

Backdoors can be detected by a blue team, but if the attacker has full access to the complete disk, they can tamper with things that are not normally changeable. Blue teams often forget to look in these places because they assume the end-user does not have those privileges.

Backdoors are usually subtle and dig themselves into existing software or house-keeping scripts so as to ‘LoL’ or ‘Live Off the Land’, as we say in offensive security terminology.
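One way a blue team might look in those places is to compare startup tools and house-keeping scripts against a known-good baseline. The following is a minimal sketch of that idea, not a description of any tooling used in this engagement. The function names are hypothetical, and it assumes the baseline snapshot is taken from a trusted image and stored off the device.

```python
import hashlib
from pathlib import Path


def hash_file(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def snapshot(root: Path) -> dict[str, str]:
    """Map every file under root (by relative path) to its digest."""
    return {
        str(p.relative_to(root)): hash_file(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def diff_snapshots(baseline: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """Report files added, removed, or modified since the baseline."""
    shared = set(baseline) & set(current)
    return {
        "added": sorted(set(current) - set(baseline)),
        "removed": sorted(set(baseline) - set(current)),
        "modified": sorted(n for n in shared if baseline[n] != current[n]),
    }
```

Anything reported as added or modified in a directory that ordinary users cannot normally write to deserves a closer look. A comparison run on the suspect machine itself is only as trustworthy as that machine, so the check is best run from separate, trusted media.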

Administrator

I already knew that the client’s remote workers were automatically connected to the VPN when logging on to their device. I had hidden my own custom code inside a common tool that runs at startup, so when that tool launched, my code piggy-backed onto the VPN connection and let me connect to the client’s main corporate network.

Recommendation

Users should have to log in or otherwise authenticate every time they connect to a VPN or access a secure area. This will annoy them, but it is more secure.

As far as the laptop was concerned, I was the HR contractor from whom I had stolen the laptop. A bit more than that, actually: with my full, backdoored access and the laptop in my possession, I'd achieved administrator-level access to the laptop’s hard drive. Despite this, without the user’s main password (which was stored in Active Directory), I could not reach my objective in the virtualized application environment that housed the client’s critical intellectual property.

Password

After some searching and another coffee, I found a local HR application that I suspected the original owner of the laptop would have used regularly. I was right, and he had even saved his password to autofill. He seemed like the type to reuse passwords across applications, so I thought that, if I could recover it, this one could be used to unlock multiple accounts.

Recommendation

In general, the option to autofill passwords should be disabled, but this is hard to implement without some form of centralized credential management service.

Companies need to weigh the risk of users mistyping their passwords and being locked out of their devices against the risk of keeping cached credentials.

There’s a small window of time during which applications process passwords in a readable format. For an attacker in possession of a victim’s device, this is an opportunity to snatch the information without brute force.

I loaded the HR application on a second computer so that I could work with my own virtualized setup, including certain useful programs and files. As soon as the HR application tried to read the cached password and decode it in memory, I paused the process, freezing the flow of data.

The decoded password was now somewhere in the HR application memory space; all I had to do was find it. I made a copy of the working memory and saved it to file. The client had a legacy 8-character password policy, so I sieved through the data, concentrating on any line of data that was 8 characters or more. In minutes, I saw it: Superm4n.
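The sieving step is conceptually similar to what the classic `strings` utility does. Below is a minimal sketch of the idea, not the software used on the engagement. The helper names are hypothetical, and the filter assumes the legacy policy also required mixed case and a digit, which the text above does not state.

```python
import re


def printable_strings(blob: bytes, min_len: int = 8) -> list[str]:
    """Extract runs of printable, non-space ASCII at least min_len long."""
    pattern = re.compile(rb"[\x21-\x7e]{" + str(min_len).encode("ascii") + rb",}")
    return [match.group().decode("ascii") for match in pattern.finditer(blob)]


def looks_like_password(candidate: str, max_len: int = 16) -> bool:
    """Assumed heuristic: short string with lowercase, uppercase, and a digit."""
    return (
        len(candidate) <= max_len
        and any(c.islower() for c in candidate)
        and any(c.isupper() for c in candidate)
        and any(c.isdigit() for c in candidate)
    )


def candidate_passwords(blob: bytes, min_len: int = 8) -> list[str]:
    """Return the strings in a memory dump that pass the heuristic."""
    return [s for s in printable_strings(blob, min_len) if looks_like_password(s)]
```

Defenders can run the same kind of check against their own applications to see whether credentials linger in memory in readable form.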

I could see dozens of applications through the virtualized application environment. After a few attempts, I logged into one with the password, then broke out of the virtualized environment onto the underlying operating system.

Recommendation

Passphrases that are long and unique are much more secure than shorter passwords that are artificially complex (such as by having capital letters and symbols). A passphrase like ‘medievalstrawberryatthemovies’ is impractical to brute-force with current hardware (so long as the words in the phrase are chosen randomly).

Another benefit is that passphrases like this do not have to be rotated every three months. Users who do not have to remember a new password every few months are less likely to write passwords down or store them somewhere on their device.
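The passphrase argument can be made concrete with a rough entropy estimate. This sketch assumes every symbol or word is chosen uniformly at random. The 94-character alphabet (printable ASCII without the space) and the 7,776-word list (the size of a typical Diceware list) are illustrative assumptions, not figures from the engagement.

```python
import math


def entropy_bits(choices_per_position: int, positions: int) -> float:
    """Entropy in bits of a secret built from uniformly random choices."""
    return positions * math.log2(choices_per_position)


# Upper bound for an 8-character password drawn from 94 printable symbols.
random_password_bits = entropy_bits(94, 8)

# Five words drawn at random from an assumed 7,776-word list.
passphrase_bits = entropy_bits(7776, 5)

# Each extra bit doubles the attacker's search space.
search_space_ratio = 2 ** (passphrase_bits - random_password_bits)
```

The 8-character figure is a best case. A human-chosen password built from a dictionary word with a digit swapped in falls far below that bound, because attackers try such patterns first.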

DLL side-loading

After a night’s rest, I returned to work. I was under the proverbial floorboards of the virtualized environment; I could see which applications were being used, as well as by whom and from where. Employees were busy at work across different regions and time zones, all accessing different applications. I didn’t have Active Directory privileges to access the restricted network housing those critical assets, so I needed to use lateral movement and pivot to another account that did have access.

Software is modular; it relies on different software libraries to perform tasks. A risk arises when applications start looking for external files in locations that the end-user and thus also an attacker can access and change. If an attacker controls the location where these files are stored, they can respond to this request, presenting whatever file the application is expecting alongside malicious code. This type of attack is known as DLL side-loading. The attacker places a spoofed malicious DLL file in a common directory that usually contains system libraries. This triggers the operating system to load the attacker's file instead of the legitimate one.

Recommendation

To defend against DLL side-loading, limit end-user write privileges to the relevant folders, and set up detection and response for directories where the end-user (and thus a would-be attacker) might try to manipulate files or introduce new ones.
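A first step toward limiting write privileges is finding out which library search locations the current user can already write to. The sketch below is a rough audit under stated assumptions: the helper names are hypothetical, and it only inspects the `PATH` variable, whereas the real library search order on any given platform includes other locations such as the application's own directory.

```python
import os
from pathlib import Path


def search_path_dirs(env=None) -> list[str]:
    """Split the PATH variable into its non-empty directory entries."""
    env = os.environ if env is None else env
    return [entry for entry in env.get("PATH", "").split(os.pathsep) if entry]


def writable_dirs(candidates) -> list[Path]:
    """Return the candidate directories that exist and appear writable."""
    found = []
    for entry in candidates:
        path = Path(entry)
        # os.access is a coarse check; on Windows it does not evaluate ACLs.
        if path.is_dir() and os.access(path, os.W_OK):
            found.append(path)
    return found


if __name__ == "__main__":
    for directory in writable_dirs(search_path_dirs()):
        print(f"user-writable search location: {directory}")
```

Run as an ordinary user, any directory this prints is a place where a planted library could be picked up. Because `os.access` does not evaluate Windows ACLs, a production audit would need to inspect permissions with platform-specific tooling.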

Looking across the system, I found six users with access to applications that stored their temporary files in a location that my account could also access. All six could be abused using DLL side-loading. I sprinkled back-doored software libraries in the target locations and, eventually, a file request came through from one user. Now I also had access to that person’s user account and all of its files, privileges and access.

Bullseye

I stole a password from the memory of a local business application, re-used it against the virtualized environment, and broke out of a sandbox application to obtain the access needed to reach the target network. I back-doored a number of utilities in use by the trading team and basically had the trading team open the door with the access needed to get to the file shares holding the trading algorithms.

One of my colleagues passed by my desk, leaving for the day. He asked me if I had found a way in.

“In, out, and everything in between,” I said, smiling.

Final actions

I’d been at my desk for around 48 hours. The finish line was in sight, but I didn’t cross it. First, I had to list the files and other assets I had access to and take screenshots of my privileges to prove to the client that we had reached the critical post-exploitation stage of the attack. I also exfiltrated example source code files and selected copies of the development environment and its key assets, but only after checking in with the customer and proving that I had access and control, and vetting the files for exfiltration so as to not introduce any unnecessary risk.

In a second browser window, I notified the white team of the engagement status: complete, objective achieved. It was time to make another ultra-black coffee before writing the client’s report; I wanted to start while the engagement was still fresh in my mind. The report contained an executive summary of the engagement, the results in terms of observations and risk, the technical details of the attack narrative, and detailed mitigation paths and suggestions for additional controls, so that next time things are a little bit harder and new processes and infrastructure can be tested.

The rest of my team had dispersed for the weekend. The office was silent. All the motion-activated lights were off, except the ones above my desk.

Read the next installment of this Red Team series ‘Episode 3 – Post engagement’ here.