Generative AI Security

Are you planning or developing GenAI-powered solutions, or already deploying these integrations or custom solutions?

We can help you identify and address potential cyber risks every step of the way.

Embrace AI while Mitigating Security Risks

Artificial Intelligence is rapidly transforming industries, and organizations are increasingly integrating large language models (LLMs) into their services and products. Whether using off-the-shelf models, customizing pre-trained solutions, or developing proprietary AI, the transformative power of these technologies is undeniable.

While AI should be recognized and embraced as a game-changer for business innovation, it's essential to be aware of the potential cyber security risks beyond the hype.

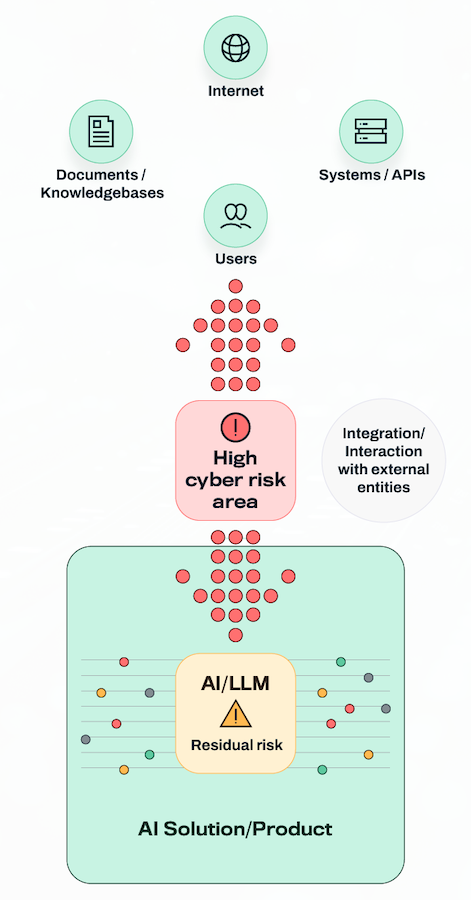

We see the majority of cyber security risks stemming from how AI models are integrated into systems and workflows rather than from the models themselves.

Failing to address these risks can expose your organization to various threats, including data breaches, unauthorized access, and compliance violations.

We can help you address the practical risks associated with integrating AI into enterprise systems and workflows. As a leading cyber security and pentesting company, we have extensive experience in helping organizations navigate the complexities of adopting new technologies such as GenAI and LLMs.

Common Pitfalls in the Use of GenAI

Practical risks associated with AI don't exist in isolation but are mostly related to the context in which the organization is using it. When building GenAI and LLM integrations, it's crucial to consider the potential security risks and implement robust safeguards from the outset.

We have identified that the most common pitfalls associated with the use of AI for businesses, especially from a security perspective, include:

Governance, Risk and Threat Modelling for AI

Our services to support you in the planning phase.

Implementation and Integration of AI solutions

Our services to support you in the implementation phase.

We Can Help

WithSecure™ is the trusted cyber security partner and industry-accredited, global provider of cyber security assurance services, with over 30 years of experience.

We understand the unique challenges that arise during the development and implementation of AI-powered solutions. That's why we offer comprehensive cyber security consulting services to support you every step of the way.

Our experienced and specialized team can help your organization leverage the full potential of AI technology while maintaining a resilient and secure infrastructure.

Contact us to find out how we can support your organization in the secure deployment of GenAI and LLMs.

Want to talk in more detail?

Complete the form, and we'll be in touch as soon as possible.

Case Studies

Securing an LLM-Powered Customer Support Agent for a Tech Start-up

Client's Challenge

A tech start-up was developing an LLM-powered virtual agent to automate the customer support experience for organisations. The agent would have access to customer accounts and the ability to perform operations such as updating address details. The client's primary concern was ensuring the security and privacy of customer data while maintaining the agent's functionality and effectiveness.

Outcome

By providing expert guidance and recommendations, we helped the tech start-up implement robust security measures for their LLM-powered customer support agent. The redesigned API and guardrail pipelines, implemented by the client based on our advice, ensured strong access controls and protection against malicious prompt injection attempts.

Customer data remained secure, and the agent could function effectively without compromising privacy or exposing the client to potential data breaches or unauthorized access.

Our Solution

Our team conducted a comprehensive security assessment of the client's LLM integration, including a thorough evaluation of the agent's tools and APIs. We identified a critical vulnerability wherein the API allowed the LLM to specify the userID, opening the door for prompt injection or jailbreaking attacks. Malicious actors could potentially force the agent to invoke the API with a different userID, enabling unauthorized access and modification of confidential information across customer accounts.

To mitigate this risk, we advised the client on redesigning the API's access controls. We recommended removing the userID parameter from the API and instead deriving the user's identity from a secure session management system, so that the LLM can never choose which account an operation applies to. Additionally, we guided the client in integrating LLM guardrail pipelines that inspect untrusted input and limit the success rate of jailbreak or prompt injection attacks.
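The access-control change described above can be illustrated with a minimal Python sketch. It is not the client's implementation: the names `Session`, `ADDRESSES`, `ALLOWED_ARGS`, `update_address` and `dispatch_tool_call` are hypothetical, and the in-memory dictionary stands in for a real customer database. The point it tries to show is that the user's identity comes from the authenticated session, and any attempt by the model to pass an identifier of its own is rejected.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Session:
    """Authenticated session established outside the LLM (hypothetical)."""
    user_id: str


# Hypothetical in-memory store standing in for the customer database.
ADDRESSES = {"alice": "1 Old Street", "bob": "2 Other Road"}

# Arguments the model may supply for each tool. No user identifier is listed.
ALLOWED_ARGS = {"update_address": {"new_address"}}


def update_address(session: Session, new_address: str) -> str:
    """Update the address of the session's own user only."""
    ADDRESSES[session.user_id] = new_address
    return f"Address updated for {session.user_id}"


TOOLS = {"update_address": update_address}


def dispatch_tool_call(session: Session, name: str, llm_args: dict) -> str:
    """Run a tool requested by the model, binding identity to the session."""
    if name not in TOOLS:
        raise ValueError(f"Unknown tool: {name}")
    unexpected = set(llm_args) - ALLOWED_ARGS[name]
    if unexpected:
        # Reject rather than silently drop, so injection attempts are visible.
        raise PermissionError(f"Arguments not allowed: {sorted(unexpected)}")
    return TOOLS[name](session, **llm_args)
```

With this shape, a call such as `dispatch_tool_call(Session("alice"), "update_address", {"user_id": "bob", "new_address": "x"})` raises `PermissionError` and leaves the other account untouched. Even if a prompt injection succeeds in steering the model, the worst it can do through this tool is change the address of the user who is already logged in.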

AI Security Strategy and Risk/Threat Modeling for a Large Enterprise

Client's Challenge

A large multinational corporation aimed to enhance its workforce's capabilities by adopting GenAI solutions, including both off-the-shelf productivity tools and proprietary LLMs integrated through external third-party APIs. The client sought guidance on evaluating the security implications of these GenAI implementations and establishing best practices for future GenAI projects. Given the dynamic nature of AI solutions, the client recognized the need for a tailored AI security strategy that complements traditional cybersecurity methods.

Outcome

By engaging in this comprehensive AI security strategy, the client gained a deep understanding of the potential risks and threats associated with their AI implementations. Equipped with our risk and threat modeling insights, they could make informed decisions and implement appropriate security controls to mitigate these risks effectively.

Moreover, the AI security checklist provided a robust framework for future AI projects, ensuring a consistent and proactive approach to addressing security concerns from the outset. This empowered the client to leverage the transformative power of AI while maintaining the highest levels of security and protecting their organization's critical assets and data.

Our Solution

We engaged with the client through a multi-phased approach, combining risk modeling and threat modeling in the context of AI to create a holistic view of the risks associated with leveraging API-based AI solutions for the organization.

Phase 1: Risk Modeling

Our team conducted a comprehensive risk assessment, identifying potential vulnerabilities and threats specific to the organization's AI implementation. We evaluated factors such as data privacy, model biases, transparency, and integration with existing systems.

Phase 2: Threat Modeling

Building upon the risk assessment, we performed threat modeling exercises to understand the potential attack vectors and scenarios that malicious actors could exploit within the AI ecosystem. This included analyzing risks related to prompt injection, model hijacking, and insecure API integrations.

Phase 3: AI Security Checklist

Based on our findings from the risk and threat modeling phases, we developed a tailored AI security checklist to guide the client in implementing robust security measures for future API-based AI projects. This checklist encompassed best practices for secure data handling, model validation, API access controls, monitoring, and incident response.